Creating a Leading PCIE Based Ethernet Host Interface Standard: Infrastructure Data-Plane Function (IDPF)

By Anjali Singhai Jain, Intel, co-chair of the IDPF TC

Why OASIS?

A few months ago, Intel NIC architects and members from Google, Red Hat, and Marvell set out to explore the standardization of Peripheral Component Interconnect Express (PCIE) based Ethernet host interfaces. We concluded that a PCIE-centric standard was needed, and so we created IDPF. As an inventor and one of the founding architects of IDPF, I am aware that the short-term benefits of standardizing IDPF are hard to justify to my team, but we do see a long-term benefit. I am grateful to my team at Intel and to the other founding member companies for coming along and trusting in what we created.

OASIS, a world-class open standards organization, made it possible for us to launch a PCIE-centric standard alongside other technologists, and gave us the platform we needed to solve some difficult technical problems. For example, OASIS has a bevy of technical standards leaders whom we could consult when we needed help, and we could also easily reach out to Intel leaders internally who have worked with OASIS on other projects. We were also able to network with a global community of technologists who are passionate about standardization, which made creating and launching IDPF educational, interesting, and challenging. When it comes to open standards, OASIS is an international leader and provides a clear and direct path to standardization.

The Road to IDPF

Upon launching, we had to clear some necessary hurdles: why do we need a standard in this space and, if we do, why IDPF rather than something built from scratch? It took a great deal of perseverance to answer the hard questions. The wonderful support from OASIS definitely helped us get started. Every OASIS member is dedicated to excellence and to making sure standards are fully equipped for de jure approval.

One reason a creator or inventor still carries on is the hope that either they will create a thing of beauty used by many, or the process will teach them important lessons for future courses of action.

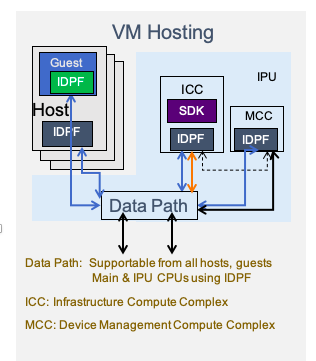

In today’s network interface cards, whether smart or not, and whether called an IPU (Infrastructure Processing Unit) or a DPU (Data Processing Unit), the Ethernet host interface exposed to the general-purpose cores comes in two flavors:

1) A highly optimized, performant host interface for the card, but often proprietary to the card vendor. Examples of Type 1 are mlx4/5 (Nvidia) and ice/iavf (Intel).

2) A vendor-neutral interface, required by the infrastructure provider, that can be used in a virtual machine, container, etc. More often than not it is designed not by the device vendor but by the infrastructure provider, or it evolves organically from the software-emulated device world to create a level playing field for all. Most importantly, it has an ecosystem around it that facilitates live migration of the virtual machine. Examples of Type 2 are vmxnet3, ENA, and virtio-net.

The reason vendors are forced to support both is simple. When a card is deployed without the need for virtualization, as in an enterprise setup or for small internal use by cloud providers or telcos, an optimized interface is the right choice even if it is vendor-specific, because the best performance is the key metric. In a cloud deployment, the key metrics are virtualization, the ability to migrate workloads for maintenance, and the ability to source the device from multiple vendors (given unpredictable supply-chain issues, relying on a single vendor is not the wisest business decision). The deployment is quite large, and the promise of the cloud to its clients in an IaaS deployment is that they should never have to deal with infrastructure-related issues; that is what clients offload onto the infrastructure provider by paying a service fee. Hence the need for Type 2 vendor-neutral interfaces with a good ecosystem for live migration.

In a cloud model, there is one more key metric to keep in mind: datacenter nodes have evolved to provide the greater security, modularity, and isolation required between the untrusted client and the service provider. There is also the element of zero trust (trust nothing and nobody) between the client renting the VMs and the infrastructure provider. This means the same network interface card (FNIC/SNIC/IPU/DPU) should provide different levels of exposure to the device through the host interfaces exposed to the client and to the infrastructure provider’s control plane. Often the card’s dataplane processing pipeline must be split logically and securely to accommodate both the infrastructure processing offload needs and the host-side accelerations/offloads.

Additionally, an important consideration in designing a client-facing Ethernet host interface is to make it almost balloon-like. It starts small, with a very tiny footprint towards the device that covers only the most essential part (think fast path), and the rest is negotiated, so that connectivity can always be provided with more or fewer host-side accelerations/offloads depending on what a given device can offer and what the service provider deems important for a certain customer’s usage. Start small but leave a lot of room to grow, not just for current host-side accelerations/offloads but also for future ones as systems and use cases evolve. A lot of consideration has been given to performance (a PCIE interface has its greatest bottleneck, cost, and delay at the PCIE boundary), to abstraction from the underlying device so that the device can evolve without changing the host interface, and to live migration. Basically, IDPF takes the best of the two types of interfaces mentioned above and combines them into one interface that can be standardized.
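The negotiation idea described above can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions: the `Cap` bit names and the `negotiate` helper are hypothetical and are not taken from the IDPF specification or any driver. The sketch assumes the basic fast path is always present, and that every optional offload is enabled only if the driver requests it, the device offers it, and the provider's policy allows it.

```python
from enum import IntFlag


class Cap(IntFlag):
    """Hypothetical optional-capability bits (illustrative, not from the spec)."""
    NONE = 0
    CHECKSUM = 1 << 0
    RSS = 1 << 1
    TSO = 1 << 2
    FLOW_STEERING = 1 << 3
    CRYPTO = 1 << 4


def negotiate(driver_wants: Cap, device_offers: Cap, provider_allows: Cap) -> Cap:
    """Return the optional capabilities all three parties agree on.

    An empty result is still a working interface in this model: the
    baseline fast path is assumed to need no negotiation at all.
    """
    return driver_wants & device_offers & provider_allows


# A driver asks for more than this particular device and policy permit.
agreed = negotiate(
    driver_wants=Cap.CHECKSUM | Cap.TSO | Cap.CRYPTO,
    device_offers=Cap.CHECKSUM | Cap.RSS | Cap.TSO,
    provider_allows=Cap.CHECKSUM | Cap.RSS,
)

# A device that offers no optional features still yields connectivity.
minimal = negotiate(Cap.CHECKSUM | Cap.TSO, Cap.NONE, Cap.CHECKSUM | Cap.TSO)
```

Because the result is an intersection, adding a new capability bit later does not affect drivers or devices that do not know about it, which is one simple way to get the "start small, leave room to grow" property.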

While designing IDPF, we kept all these aspects in mind. IDPF is the result of a collaboration between an Ethernet device vendor with extensive experience in designing high-performance, feature-rich devices and host interfaces, and a well-known infrastructure cloud provider with a very deep understanding of various usages and deployment models. We also kept in mind that each vendor will have something unique to offer from its device, and left room for such OEM-specific features to be negotiated and used when needed as part of the IDPF negotiation sideband to the device.

As part of this standardization effort, we identified the standard Ethernet acceleration and offload features that most vendors offer to customers because they are time-tested and ubiquitous in a modern-day Ethernet interface. Some of these features are stateless offloads, such as checksum and receive side scaling; some keep temporary state on the device side, such as segmentation offload and receive side coalescing; others are more stateful, such as flow steering and crypto offload; and some are supplemental, such as precision time offload and earliest departure time (pacing of flows). This is by no means an exhaustive list. It will grow as the standards committee for IDPF reviews it and makes further additions, in the hope that this standard will stand the test of time and evolve, adding some new features and deprecating others as we move forward. For example, we hope to standardize an RDMA host interface as part of this IDPF effort sometime in the future.
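The grouping of features above can be written down as a small lookup table. The following Python sketch is purely illustrative: the `OffloadClass` enum, the `OFFLOADS` table, and the `features_in` helper are hypothetical names, and the entries simply mirror the examples named in the text rather than any list from the IDPF specification.

```python
from enum import Enum


class OffloadClass(Enum):
    """Feature classes as described in the text (names are illustrative)."""
    STATELESS = "stateless"
    TEMPORARY_STATE = "temporary device-side state"
    STATEFUL = "stateful"
    SUPPLEMENTAL = "supplemental"


# Examples taken from the paragraph above; not an exhaustive list.
OFFLOADS = {
    "checksum": OffloadClass.STATELESS,
    "receive side scaling": OffloadClass.STATELESS,
    "segmentation offload": OffloadClass.TEMPORARY_STATE,
    "receive side coalescing": OffloadClass.TEMPORARY_STATE,
    "flow steering": OffloadClass.STATEFUL,
    "crypto offload": OffloadClass.STATEFUL,
    "precision time offload": OffloadClass.SUPPLEMENTAL,
    "earliest departure time": OffloadClass.SUPPLEMENTAL,
}


def features_in(cls: OffloadClass) -> list[str]:
    """Return the example features of one class, sorted by name."""
    return sorted(name for name, c in OFFLOADS.items() if c is cls)


stateful = features_in(OffloadClass.STATEFUL)
```

Keeping the class next to each feature makes it easy to extend the table as the committee adds or deprecates features.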

As part of this IDPF standardization, we (Intel) will contribute a seed specification with all details on what is expected from the device, an upstream Linux kernel driver, an emulated userspace device, and an open-source DPDK driver. We will also contribute heavily towards the live migration ecosystem for a VM using such an interface on a Linux hypervisor.

Creating an interface that is both performant and meets all other cloud needs is hard, but standardizing on one is harder. It is for a good cause: many vendors can play together, and overall the customer benefits from it (example role models: USB, PCIE, NVMe). And we in the Ethernet world can move the technology forward, focusing on real problems and leaving behind problems of non-compliance. Our goal is to seed the standard Ethernet host interface with something that we have poured our hearts and minds into. We hope this helps everyone, and that we can all benefit from joining forces.

Participation in the Infrastructure Data-Plane Function (IDPF) TC is open to all interested parties. Contact join@oasis-open.org for more information.